Behaviour Driven Unit Tests

"If we change the implementation details, none of our tests should break" - Ian Cooper

This blog comes following a work chat around one of the best tech talks I've watched; TDD: Where Did It All Go Wrong by Ian Cooper

Stop me if you've heard this one before:

You have decent unit test coverage of your application / component, however:

- Every time you refactor the structure of your code, without changing the behaviour, 3 things happen:

- You get multiple compile errors in your test project as now as you're passing new dependencies into the tested classes constructors

- On fixing the tests; they now fail, as your new dependencies aren't mocking the new behaviours in the expected fashion

- You update your tests, cautious that you have to maintain their validity whilst adding new mock behaviour

- After refactoring you now have to either move or create new tests against the new code structure

- Sometimes you find you are writing tests that cover internal parts of the code, focusing on that over the actual expected behaviour

- Especially true if you're testing a skinny Controller is correctly calling a Manager/Service

- You see your tests frequently using

.Verify()to ensure the Controller has passed down values - So the "behaviour" under test is that code is calling code and passing the correct properties...

- You have 1 test fixture per public class in your system

- Each fixture contains a cumbersome number of tests covering that class entirely

- Again refactoring that class without changing the behaviour requires you to update, rewrite or create entirely new tests

- When writing tests you constantly have the class under test open and treat it as a "white box", rather than focusing on the behaviours you expect in "black box" fashion

- You frequently struggle understanding whether a test is broke because the logic is wrong, or because the mocking is wrong (and it's usually the latter)

In his talk Ian argues two important points when considering where unit testing has gone wrong: firstly that we are testing the operations in classes / methods rather than the behaviours of the system, and secondly that our tests have become strongly coupled to the implementation detail.

High unit test coverage should give us the confidence to refactor the system, knowing that if we break the behaviour the tests will tell us. Instead this coverage in an impediment, it slows down development by adding cost to every refactoring exercise we do.

Deeper Dive Into The Issue

Let's say we have an AddressController which is responsible for getting the address of a user from the database

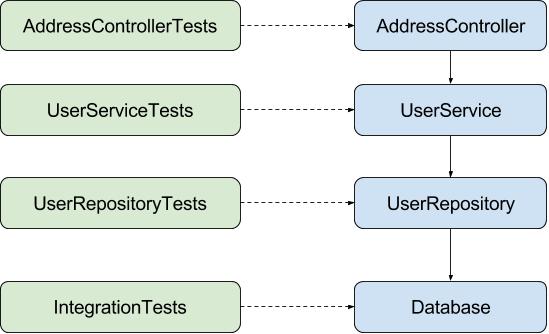

In most companies I've worked unit tests are written like this:

Each class is tested separately, with it's dependencies mocked out, so AddressController would have a mock UserService, the service would have a mocked UserRepository and so on.

The advantage here is that when writing tests we can focus on the logic contained in each separate class, with a view that by testing each piece behaves as expected we will have a higher degree of confidence that the system works when fit together, assuming we also apply prudent integration & system testing.

However in this approach lies the biggest issue, each test fixture is coupled completely, even in name, to the implementation detail.

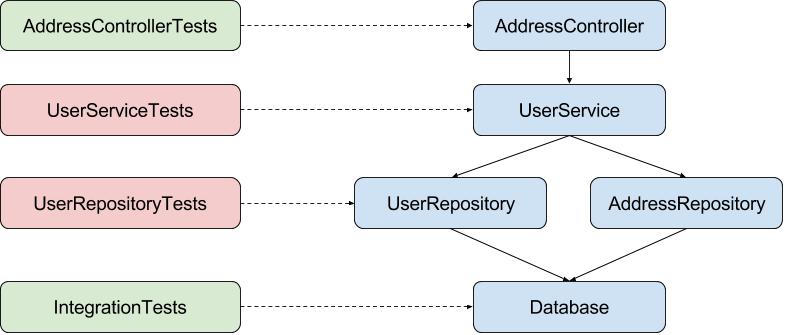

In this scenario, let's say I think getting an address shouldn't really belong in the UserRepository, it should have it's own repository called AddressRepository. So I refactor the relevant methods out, what happens next?

The UserServiceTests won't compile as the UserService now depends on a new class AddressRepository; whilst the UserRepositoryTests fail because the methods it use to test have been moved to the AddressRepository. To fix this I have to:

- Add a mocked

AddressRepositoryto theUserServiceconstructor inUserServiceTests - Make sure the mocked repository is returning the correct details to make the tests pass

- Moved the tests in

UserRepositoryTestsassociated with addresses to a new test fixtureAddressRepositoryTests

It's important to note here that at no point have I changed the behaviour of the system, yet a simple refactoring of splitting a repository has required a sweeping set of changes to my tests.

Surely there's a better way?

The Better Way

Frankly my tests shouldn't give two shits how the code works under the hood (white box), all it should care about is how the system is expected to behave at it's extremities (black box).

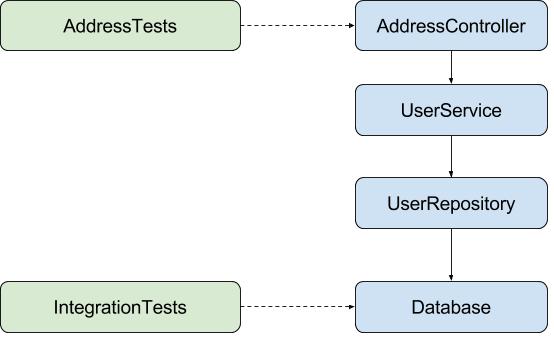

We start by decoupling the tests from the implementation detail, now we only have 1 test fixture called AddressTests which is responsible for testing the AddressController right down to the next external dependency:

The AddressTests in this scenario should mock 1 thing and 1 thing only; the database. Everything else should be a concrete implementation (more on achieving this in a bit).

The tests themselves should then be concerned with setting up the database mock with the test data required to service a request to the AddressController. If this is a CRUD endpoint the tests would also verify this data is correctly modified by the requests at the database level.

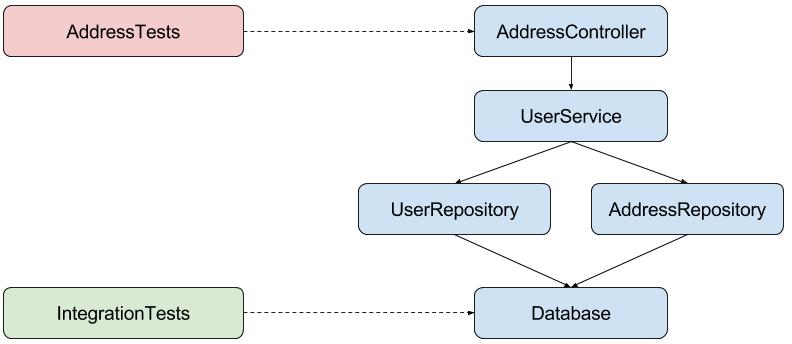

What happens when we refactor again to add our AddressRepository?

AddressTests still has a compile error, we've added a new dependency AddressRepository that needs to be passed in to the AddressController. However now to update the tests we only have that single step of adding a concrete implementation to the controller in the tests, everything else stays the same.

This gets us closer to the "holy grail" of unit tests, where the tests are protecting us from unintended behaviour change whilst letting us freely change the implementation detail underneath.

The Even Better Way

We're not quite free of implementation detail yet, there is still one more step that will allow us to refactor freely without touching the tests.

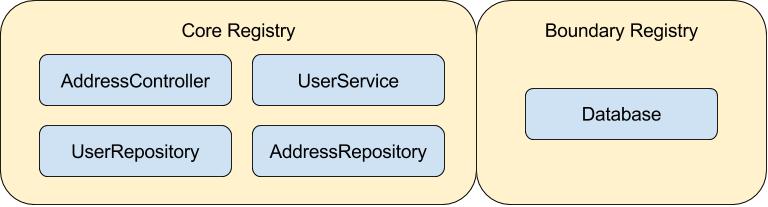

Starting with an IOC container; we need two registers / installers for our system:

The Core Registry defines the internal logic of the component and should be accessible to both the component and test projects; everything in here will be provided as a concrete implementation to the tests.

The Boundary Registry defines the external dependencies of the system; so I/O, logging, API clients, databases etc. This should be accessible to the component project only.

The test project then defines it's own registry that provides mock versions of the external dependencies for all tests.

Now when we refactor code internal to the component the tests should remain unchanged, as they are using the same DI registry as the application.

Using This In Practise

We implemented this approach at Careflow Connect having hit a lot of the issues stated earlier. As the company grew and the software became more complex we wanted to refactor and restructure more frequently, yet found working through the treacle of strongly coupled tests, that in places seemed to have little value, a constant frustration.

A few things happened when we moved over to behaviour driven unit tests of note:

-

We ended up with libraries of tests that focused heavily on the expected behaviours of the system whilst providing more value as each test covered all the code from the edge of our public interface (controller level or the edge of a client library) down to the external dependencies.

- This lead to suites of tests that looked and operated like feature / system tests without the overhead of running in Selenium and persisting test data in a concrete database.

- Further to this the tests felt "purer" as they only tested the inputs and outputs of the public interface along with the side effects of any dependencies.

-

It really works! Refactoring becomes much easier as the tests give you the safety net of knowing if you've broken the expected behaviour. We could replace all the internals but so long as we maintained the external contract and outputs to external dependencies the tests required no extra work.

-

Setting up test data gets harder, much much harder.

- When isolating a single class, assuming you've heavily applied SRP, it's quite easy to mock the responses required for methods to function. When you're dealing with concrete implementations right down the stack, you start to have to add all the data required for the flow to work normally.

- In our example of a

AddressTestswe may have to not only add an address to the mocked database, but ensure any authentication against the user is provided, the user has the correct priviledges to perform CRUD operations etc. - Dependent on the complexity of the component under test this could get very large (think if you were testing a

SearchControllerthat aggregated lots of data from different sources) - To help with this, it's best to build a set of Test Data Helpers responsible for creating various states in your system. The encourages reuse in tests and can employ the builder pattern for fluent readability

-

Using a DI container slowed the tests down

- I believe this may have been as we registered dependencies using reflection rather than explicit declarations

- Worth bearing in mind though you are now handling a DI container for each test invocation

-

There is still value in writing unit tests at unit level (1 to 1 mapping to a class)

- This works well for any classes with complex logic where you want to test all edge cases without having to build a large set of test data

- For example; validators, algorithms, static helper methods etc

Next Steps

- Watch Ian Cooper's Talk - TDD: Where Did It All Go Wrong

- A few great follow up blog entries (cheers for the links Alastair)

- Watch Microtesting: How We Set Fire To The Testing Pyramid While Ensuring Confidence (cheers Joseph)

Please let me know what you think of the blog, did I cover it in enough detail? Is there anything I've missed?